Summary: When designing information environments for better user experience, use information models that reflect human behavior and situations in context.

When designing information environments, whether an app, a website, or a cross-channel service, it’s easy to fall into the trap of designing as if the only things that matter are specific, concrete, linear tasks performed by a generic user. Sure, you may try using personas to establish some different archetypes for users, or some scenarios that describe tasks. But staying focused on the tasks alone can result in straitjacketed, one-size-fits-all requirements that overlook important differences in the situations, needs, and personal make-up that drive user behavior. For that reason, I thought I’d share some basic models that I’ve found helpful for thinking through important areas of user context.

Humans Are Not Machines

About five years ago, I was working on a complex web service for a financial services company. I found myself, as usual, in long meetings with Business Analysts, folks from Engineering, and a few SMEs (subject-matter experts), who were looking askance at me, the “UX person,” and wondering why the guy who was going to make the UI was involved in these early sessions. And (again, as usual) I experienced the conventional approach to making enterprise software: gathering inputs to make into requirements, while creating “process models”—essentially schematics that describe human activity in a linear, decision-tree model that looks a lot like the sort of flowchart one uses for software or electrical circuitry. This is what happens when a process that emerged a generation ago—for creating software that allows machines to work with other machines—is put into service for creating software that allows machines to interface with people.

But people aren’t machines. They’re squishy, messy, overlappy, emotional creatures whose ability to understand and use stuff changes throughout their lives (or even throughout a given day).

In the midst of that project, I started pulling out the typical User Experience tropes about personas, scenarios and the like. These were helpful, but in this particular case I realized I was finding them lacking. Why? Because, when embedded in an IT team, so much of the work happening is heavily focused on user tasks—the actions that drive concrete requirements. In an Agile team, it’s often no different. User stories get written for very concrete, specific pieces of functionality, because the priority is on delivering working code at the end of a sprint.

I can’t place all the blame on IT culture, though. Even in UX circles, we’re often trained to look for tasks, and to design for supporting those tasks. But what situation triggered the interaction, and what needs arose that prompted the activity to begin with? Do users even know what task they’re supposed to be performing? How do they discover it? How does the task change in the face of learning as they go, discovering new needs, or the emotional turbulence of the situation they’re coming from?

Consider the Situation

A couple years earlier, I’d done some freelance work for a non-profit that provides a web environment for people affected by breast cancer: patients and their families, friends, and caregivers. One of the things we discovered during contextual research was that people’s needs shifted considerably from one moment to the next, depending on what phase they were experiencing. A patient who was recently diagnosed with cancer was often in a state of high emotional arousal—a near panic—and unable to comprehend complicated, multi-faceted information layouts or technical content. But within a short time, that same person tended to become an expert researcher, throwing herself (or himself) into a voracious “flow state,” learning everything possible. The previously hindered user was now able to handle a good deal more complexity and information variety. Some people went through such a cycle several times over the course of their diagnosis and treatment.

It was during that project that I realized we needed better tools for figuring out the dimensions of the user: the “interior” context, so to speak. I also realized we needed a way to understand what situation gave rise to the tasks at hand. For example, a spouse of a newly diagnosed patient might be searching for similar terms, and reading similar content, but from a very different angle of need. Likewise, a patient might be performing Task A but be frustrated that Task B is so far removed from Task A, even though the context of the patient’s need means those tasks are highly related for that user, and should be situated more closely to one another.

So, later, during the financial services project, I sketched out a few quick blob-like diagrams to try working through these ideas with the team. They actually helped remind us to continually consider the situational factors within which the tasks were nested, and drove new requirements for help content, synonym links and other enhancements that improved the wider environment for the specific web application.

The models became tools that I’ve used ever since, in projects and in teaching. In the meantime, others have kindly borrowed and adapted them. (For example, Daniel Eizans referenced the personal/behavioral model in his writing and presentations on Content Strategy, and Erin Kissane included a mention in her book, The Elements of Content Strategy.) Even though I’ve had these up in various forms on SlideShare for years now, I’ve never really explained them very well in article or post form. So here goes.

I’m not much for overly complex models and diagrams. So these may seem simplistic and obvious. But simple is good, in my view, because you can carry it around in your head. And simple is also good because you can combine many small, simple things to figure out a big, complex thing.

Personal-Behavioral Context

People are complicated. Even so, software projects tend to treat them almost as an afterthought, even these days. I’ve sat in meetings like the ones I described above, where a group of highly paid people spends many hours figuring out how System 1 will interface with System 2, both of which are well-documented platforms with APIs. That’s machinery made out of code, with interfaces that, while certainly complex, are made to connect with other interfaces in a fairly straightforward way.

But people aren’t like that. We aren’t made of industry-standard parts and documented, logical APIs. We’re nuanced animals who satisfice our way through the day, and who do most of what we do in a tacitly driven, mostly-passive cognitive state. While it’s true that we do some important things in an explicitly conscious manner, even those actions are heavily influenced by all the stuff going on in our bodies and brains that we hardly realize is there.

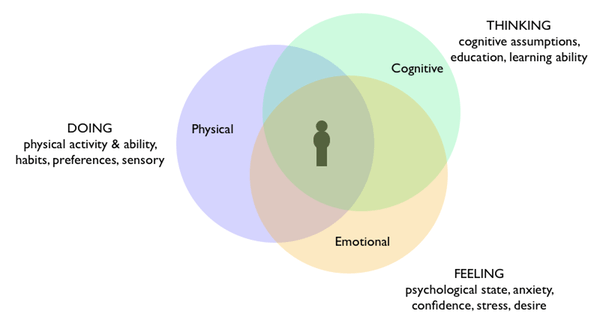

There are many factors that can affect user motivation, ability, and behavior. To keep things simple, I lumped them into three dimensions: Physical, Cognitive, Emotional.

Physical

This is about “doing.” What is the physical activity involved, from one interaction to the next? Is the user standing on a cold, loud, busy street where there’s a lot of glare from the sun overhead, trying to figure out how to pay for a parking space? Is the kiosk set so high up that someone of small stature or sitting in a chair can’t interact with the system? Does the layout of the interface meet the physical muscle-memory habits of the user? Is the application trying to interact with sound, even though the user tends to use the application in noisy environments?

Cognitive

This is about “thinking,” or what we tend to think of as the brain-centric stuff. Is this a highly educated person (either in general, or educated and experienced about the specific domain being addressed), or is this a “newbie”? Can we expect the typical user to be a quick learner who is motivated to pick up new interaction modes or iconographic cues? Is the user involved in a task that requires a lot of complex information to be juxtaposed at once, or is it more important to clear the decks and provide clarity and focus, one step at a time, even if it takes longer?

Emotional

This focuses on the psychological state the user is experiencing. As someone scared of a pending diagnosis, I may not be able to comprehend a huge index of possible topics—I may just need the ability to go into the “you’re waiting to find out” area where I can be quietly reassured. If I’m trying to quickly find a new connecting flight after my first leg arrived an hour late, I may not be in an emotional state that can handle “Recommended Tropical Destinations!” shoved into my face. If I’m angry about your system failure, I may not be receptive to cheeky status messages. These are as much an issue for functional considerations as they are for content choices.

To my mind, all three dimensions are what we should explore when we do something we consider a “persona.” Regardless of where we gathered the insights, or whether we make posters and placemats with charts and graphs and meticulously chosen stock-photo-people, these are the dimensions we should do our best to understand and get “under our skin.”

So, that’s the People side of the equation. What about the Activity side?

Personal-Situational Context

Many of the methods used in UX work tend to begin with discovering the tasks that users are trying to perform. Task Analysis, Mental Models, GOMS and other approaches focus on surveying the indigenous behaviors (or the users’ descriptions of those behaviors) and teasing out the concrete tasks, then designing the system to meet the needs of those tasks. Honestly, that’s a huge leap forward from the digital system design “practices of old,” which basically were “Make something that does X, Y, and Z in such a way that it doesn’t break the hardware, then train people how to use it.” But it’s time to move past the assumption that merely inventorying and describing the tasks is enough, or that most user activity is to meet an explicitly articulated “goal.”

For example, it’s still common to run across software (especially in enterprise settings) that tries to use a single interface for multiple, overlapping scenarios. Planning a birthday party? Here’s a form to fill out that helps you do so. Planning a funeral? Fill out the same form! Why? Because the entity is called “Event” in the entity-relationship diagram.

Tasks don’t live in a vacuum. Each task arises from some need in the background. And each need is triggered by some situation. For example, your spouse has a milestone birthday coming up, and you want to throw a big birthday party.

Situation: Spouse’s birthday is imminent, and you want to celebrate it with friends and family.

Needs: The situation spawns many needs, only one of which is “I need a good place to have a big party, since our condo isn’t very large.”

Tasks: The single need of “find a good party space” also spawns a number of tasks: researching event venues, comparing prices and services, contacting the venues, making reservations, arranging payment, etc.

By describing the originating situations and the various needs spawned by them, we bring a rich contextual understanding to the tasks—the concrete activities where people interact with the systems we create. It allows us to better organize and design those systems to accommodate the varieties of situational context and need that give rise to the activities to begin with.
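To make the nesting concrete, here is a minimal sketch of the birthday example as a data structure. The class names, field names, and the `tasks_in_context` helper are my own illustrative assumptions, not part of any particular tool or method; the only point is that every task stays attached to the need and situation it came from.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A concrete activity where a person interacts with the system."""
    name: str


@dataclass
class Need:
    """Something a situation gives rise to; each need spawns tasks."""
    description: str
    tasks: list[Task] = field(default_factory=list)


@dataclass
class Situation:
    """The originating circumstances that trigger one or more needs."""
    description: str
    needs: list[Need] = field(default_factory=list)


def tasks_in_context(situation: Situation) -> list[tuple[str, str, str]]:
    """Return (situation, need, task) triples so no task loses its origin."""
    return [
        (situation.description, need.description, task.name)
        for need in situation.needs
        for task in need.tasks
    ]


# The birthday example from above, with hypothetical task labels.
birthday = Situation(
    description="Spouse's milestone birthday is imminent",
    needs=[
        Need(
            description="Find a good party space",
            tasks=[
                Task("Research event venues"),
                Task("Compare prices and services"),
                Task("Contact the venues"),
                Task("Make a reservation"),
                Task("Arrange payment"),
            ],
        )
    ],
)

for _, need, task in tasks_in_context(birthday):
    print(f"{task}  <-  {need}")
```

A funeral would be a different `Situation` with its own needs, even if some of the task names happened to overlap with the party’s. That difference is exactly what a shared “Event” form throws away.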

In the financial services project, we were providing the ability for customers to make difficult decisions about giving over control of their finances, and at what level. It involved having to simplify complicated business rules into a few tiers—in a way that someone could understand even in emotionally charged situations, such as marriage, divorce or death.

For the breast cancer site, we realized each piece of content should be gauged along three dimensions—clinical, practical, and emotional—so it could be presented in contextually relevant moments.
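As a rough illustration of what gauging content along those three dimensions could look like, here is a small sketch. Everything specific in it is an assumption for the sake of the example: the 0 to 3 rating scale, the article titles, the weights, and the simple weighted-sum ranking. It is not a description of how the actual site worked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentItem:
    """A piece of content rated on three dimensions (hypothetical 0-3 scale)."""
    title: str
    clinical: int
    practical: int
    emotional: int


def rank_for_moment(items, clinical, practical, emotional):
    """Order items by how well their ratings match what the moment calls for.

    The three weights describe the reader's current situation; the score is
    a plain weighted sum, chosen only because it is easy to reason about.
    """
    def score(item):
        return (
            item.clinical * clinical
            + item.practical * practical
            + item.emotional * emotional
        )

    return sorted(items, key=score, reverse=True)


# Made-up titles and ratings, for illustration only.
library = [
    ContentItem("Understanding your pathology report", clinical=3, practical=1, emotional=0),
    ContentItem("Questions to bring to your first appointment", clinical=1, practical=3, emotional=1),
    ContentItem("While you wait for results", clinical=0, practical=1, emotional=3),
]

# Hypothetical weights: a newly diagnosed reader needs reassurance first,
# while the same person in "researcher" mode wants clinical depth.
newly_diagnosed = rank_for_moment(library, clinical=0, practical=1, emotional=3)
researching = rank_for_moment(library, clinical=3, practical=1, emotional=0)

print(newly_diagnosed[0].title)
print(researching[0].title)
```

The same library yields a different first result for the same person at two different moments, which is the behavior the three ratings were meant to support.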

Systems in Context

We tend to gravitate toward concrete tasks because they’re closer to the concreteness of the systems we’re focusing on making. But we have to always keep in mind that the artificial, binary, logical nature of the machinery can only roughly approximate the complexity of the organic, analog behaviors and needs of actual people.

Can we comprehensively cover every facet of every person? No. Can we fully understand and accommodate every dimension of originating situations and related needs? Certainly not. But that doesn’t mean we shouldn’t investigate these dimensions enough to gain at least a rudimentary understanding of the situational patterns, and the categories of need.

That understanding sheds light on the tasks in such a way that we can better plan for different versions of functionality and varieties of interaction, and for meeting people a little bit closer to their lives, as they really live them.

Postscript:

If you’d like to make use of these diagrams, please adapt them as needed, but be a good sport and provide attribution to me (Andrew Hinton). Thanks!